
Biography

Hello, I am Ziwei, currently a 4th-year Ph.D. candidate in computer vision and robotics at the University of Toronto. I am a member of the Toronto Robotics and AI Lab, supervised by Prof. Steven L. Waslander. I am affiliated with the University of Toronto Institute for Aerospace Studies (UTIAS), the University of Toronto Robotics Institute, and the Vector Institute.

I was fortunate to collaborate, as a research intern, with researchers at Niantic Labs in London, UK (2024), Microsoft Research Asia (MSRA) (2023), and Megvii Research (Face++) (2021).

I received my M.Sci. (2021) and B.E. (2018) from the Robotics Institute of Beihang University, Beijing, China, supervised by Prof. Wang Wei. In 2017, I visited the Intelligent Robot Laboratory at Tsukuba University, Japan, as a research assistant supervised by Prof. Akihisa Ohya.

Please refer to my [CV] for more details.

I will graduate before August 2025 and am actively seeking job opportunities in World Models/Generative AI, Embodied AI/Robotics, AR/XR, and other related fields. Please feel free to contact me via email. Thank you!

Research Interests

My research focuses on the intersection of 3D vision, generative models, and computer graphics. I am interested in building a world model, which is essential for enabling machines to perceive, understand, and interact with real-world 3D environments. It has broad applications in physical embodied AI, including robotics and augmented reality. More specifically:

3D Representations for Objects and Scenes: Gaussian Splatting and implicit representations (e.g., NeRF, SDF), with applications in Simultaneous Localization and Mapping (SLAM), object reconstruction, and pose estimation.

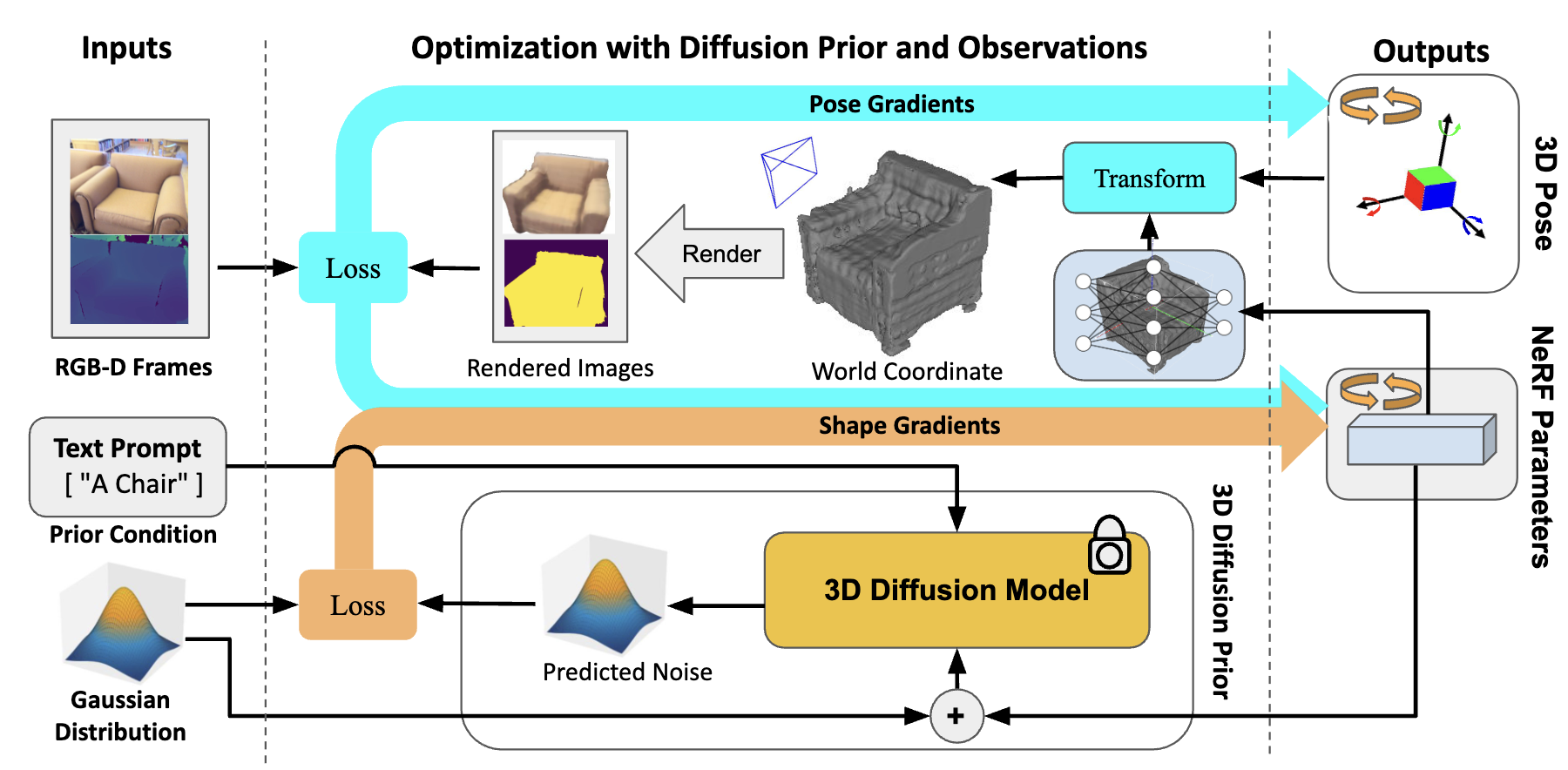

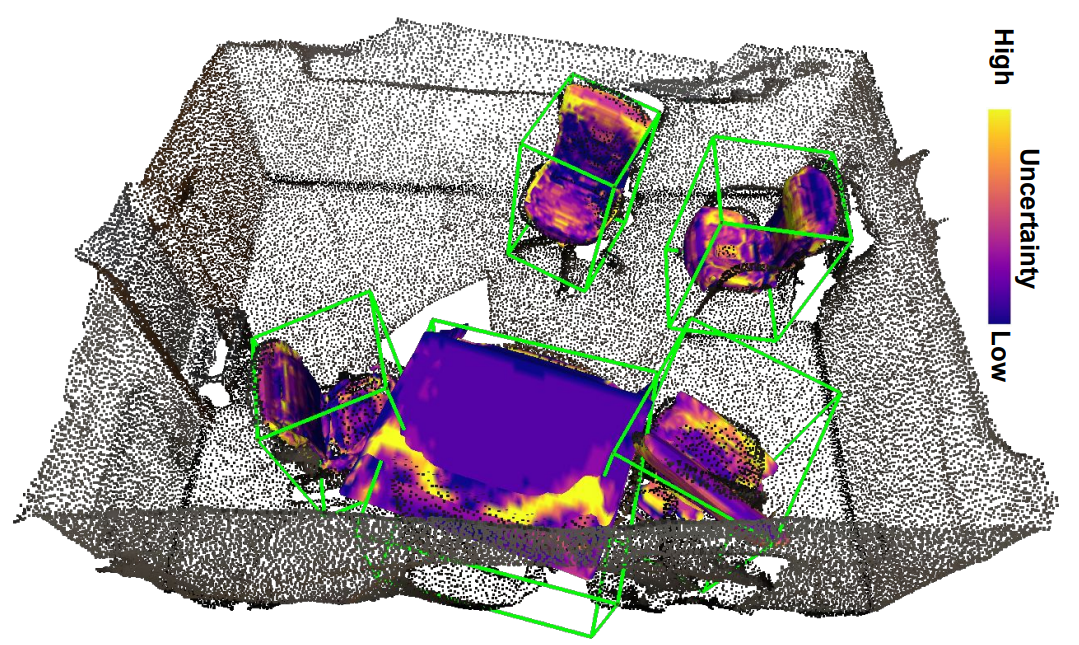

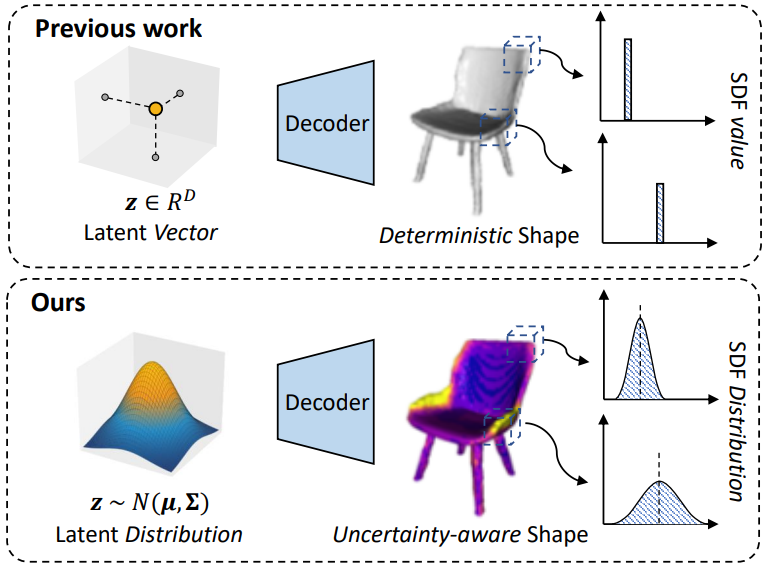

3D Generative Modeling: Learning to generate 3D representations from images using diffusion models; solving ill-posed inverse problems by leveraging generative models as priors and quantifying uncertainty.

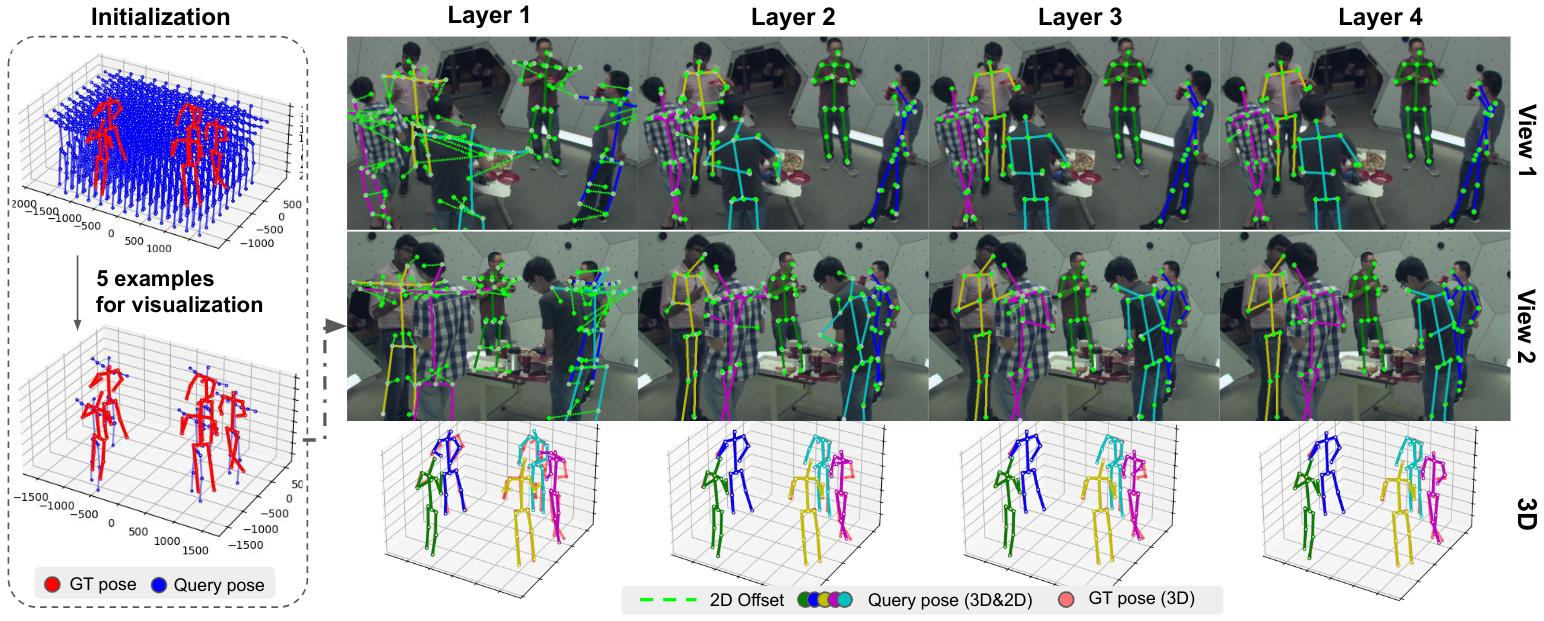

Learning for 3D Perception Tasks: Developing multi-view learning architectures (e.g., Transformers) for 3D perception tasks such as human pose estimation, while enhancing generalization capabilities.

If you are interested in any of these topics, please don’t hesitate to reach out to me for discussion and potential collaboration.

News

[3/2025] A paper on 3D Gaussian Splatting with Diffusion Models, in collaboration with Niantic Labs, has been submitted for review and will be released on arXiv soon.

[12/2024] I joined Niantic Labs as a research intern in London, UK, in June 2024. I had a wonderful time there and completed the internship in December 2024.

[9/2024] A paper on general object-level mapping with a 3D diffusion model has been accepted as a Spotlight presentation at CoRL 2024, held in Munich, Germany.

[2/2024] A paper on 3D human pose estimation with transformers, in collaboration with Microsoft Research Asia, has been accepted to CVPR 2024, held in Seattle, USA.

[1/2024] A paper on object-level mapping (3D pose & shape) with uncertainty has been accepted to ICRA 2024, held in Yokohama, Japan.

[10/2023] A paper on 3D object reconstruction with uncertainty was accepted to WACV 2024, held in Hawaii, USA.

[2/2022] A paper on monocular object-level SLAM with quadrics (SO-SLAM) was accepted to IEEE RA-L and presented at ICRA 2022.

[9/2021] I joined the Toronto Robotics and AI Lab at the University of Toronto to pursue a Ph.D. in computer vision and robotics.

…

Academic Service

I am serving as a reviewer for:

- Journal: The International Journal of Robotics Research (IJRR), IEEE Robotics and Automation Letters (RA-L)

- Conference: CVPR 2023-2024; ECCV 2024; NeurIPS 2024; ICLR 2025; ICML 2025; ICRA 2023-2024; WACV 2024-2025.

Selected Publications

Please see my Google Scholar Page for the latest updates.

3D with Generative Models

| Toward General Object-level Mapping from Sparse Views with 3D Diffusion Priors |

| Uncertainty-aware 3D Object-Level Mapping with Deep Shape Priors |

| Multi-view 3D Object Reconstruction and Uncertainty Modelling with Neural Shape Prior |

3D Human Pose

| Multiple View Transformers for 3D Human Pose Estimation |

3D Object-level SLAM

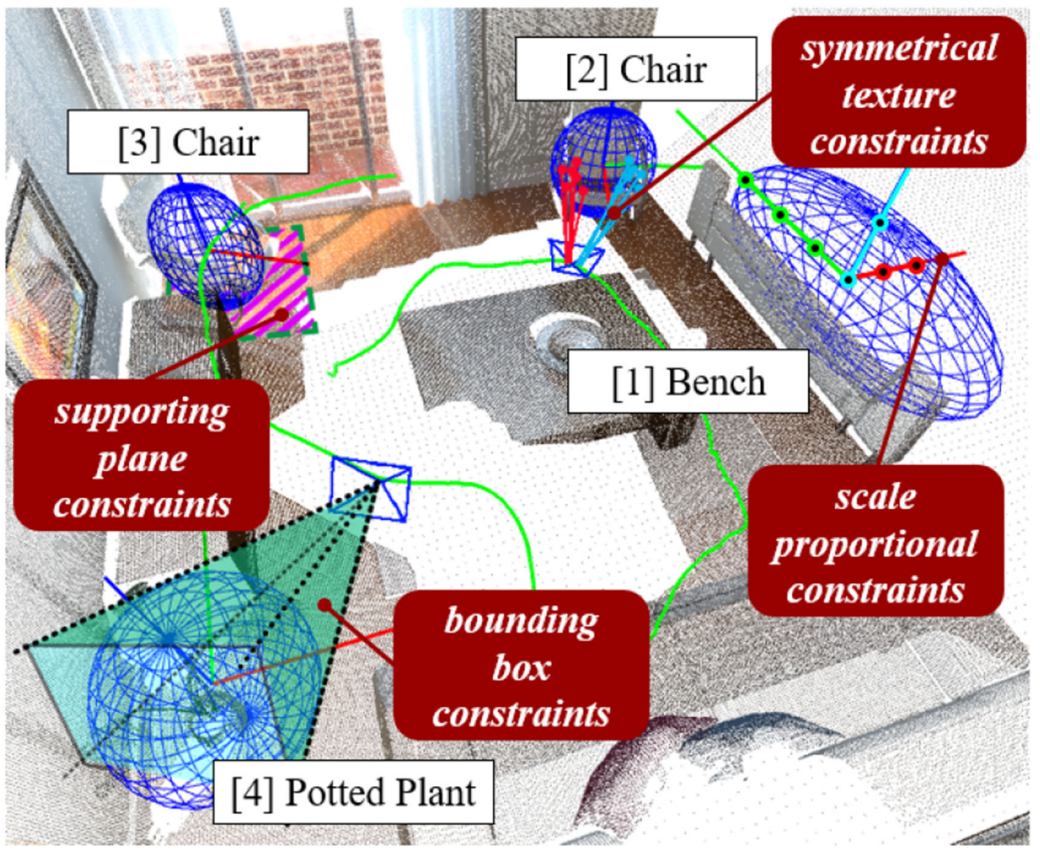

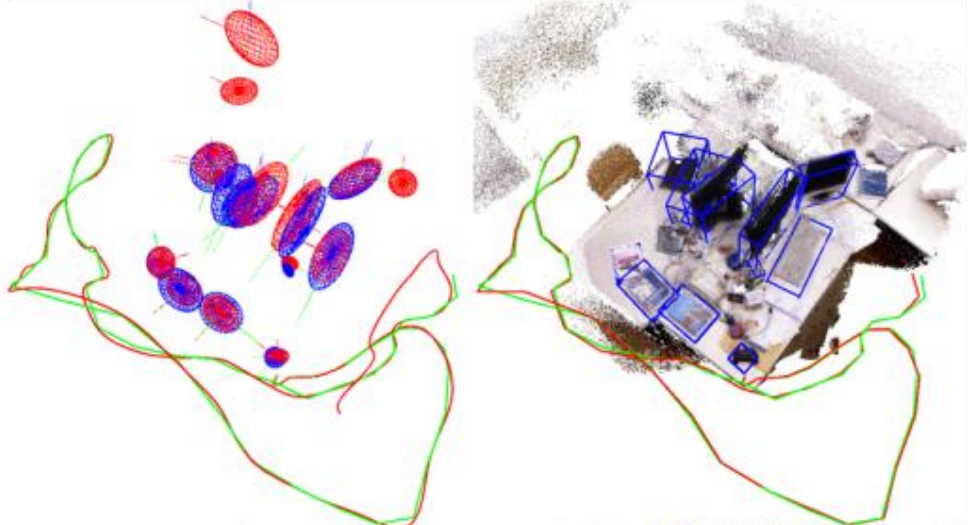

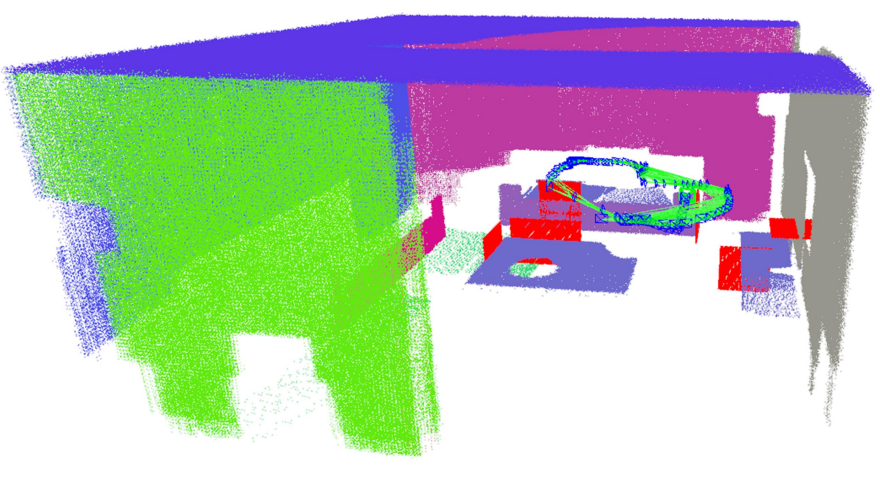

| SO-SLAM: Semantic Object SLAM with Scale Proportional and Symmetrical Texture Constraints |

| RGB-D Object SLAM using Quadrics for Indoor Environments |

| Object-oriented SLAM using Quadrics and Symmetry Properties for Indoor Environments |

Mapping, Localization, SLAM

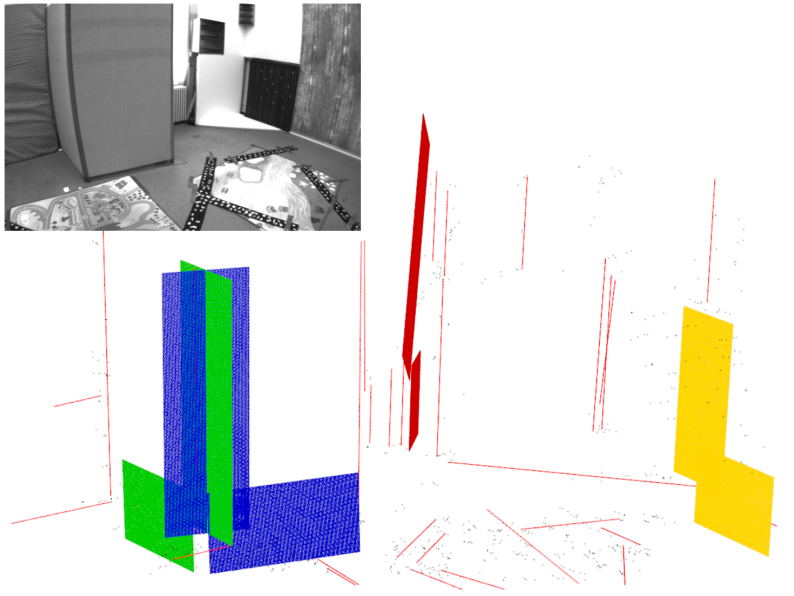

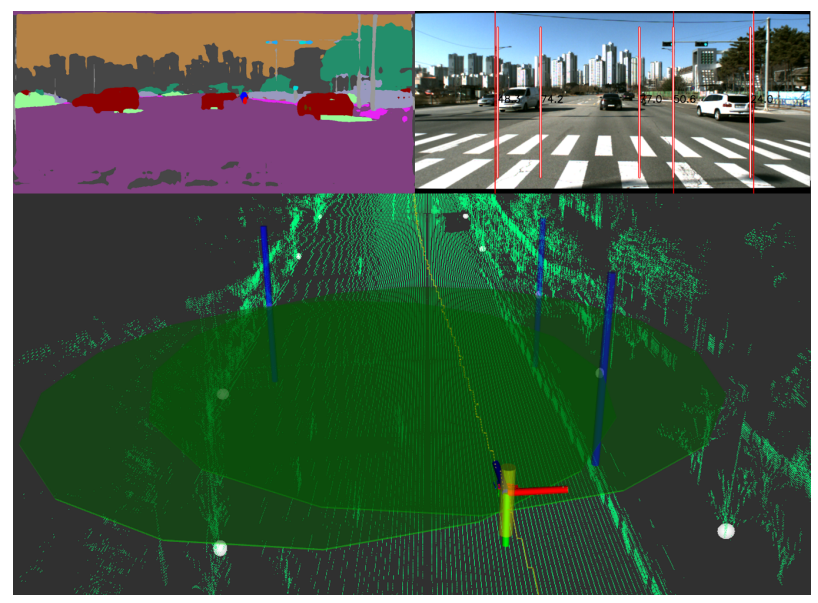

| Stereo Plane SLAM Based on Intersecting Lines |

| Point-Plane SLAM Using Supposed Planes for Indoor Environments |

| Coarse-To-Fine Visual Localization Using Semantic Compact Map |

Robot Navigation

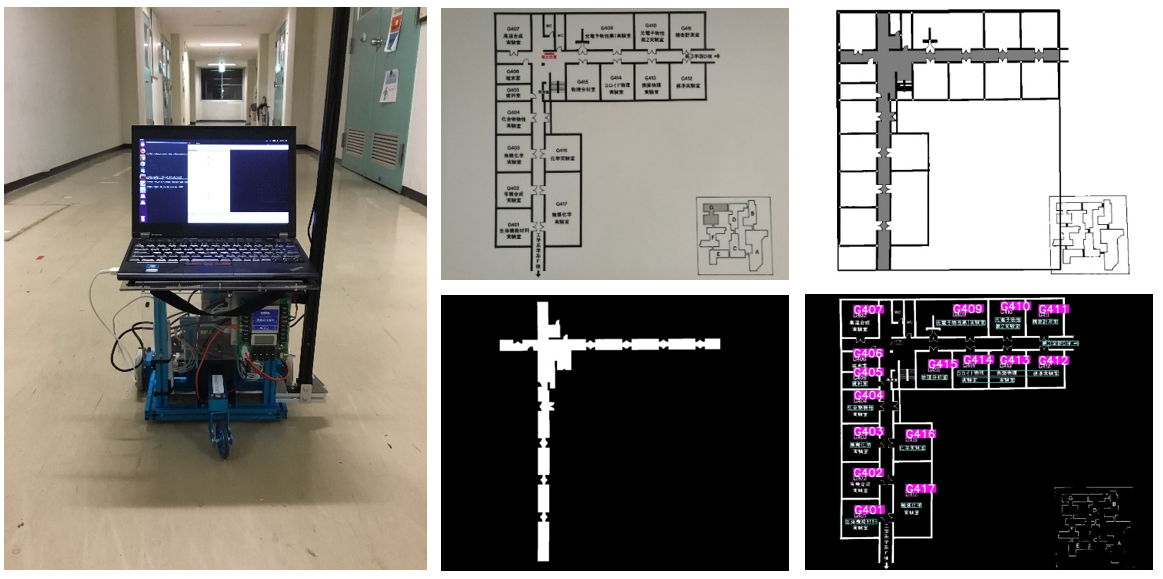

| Semantic Navigation for Indoor Robots with Corridor Map Prior |

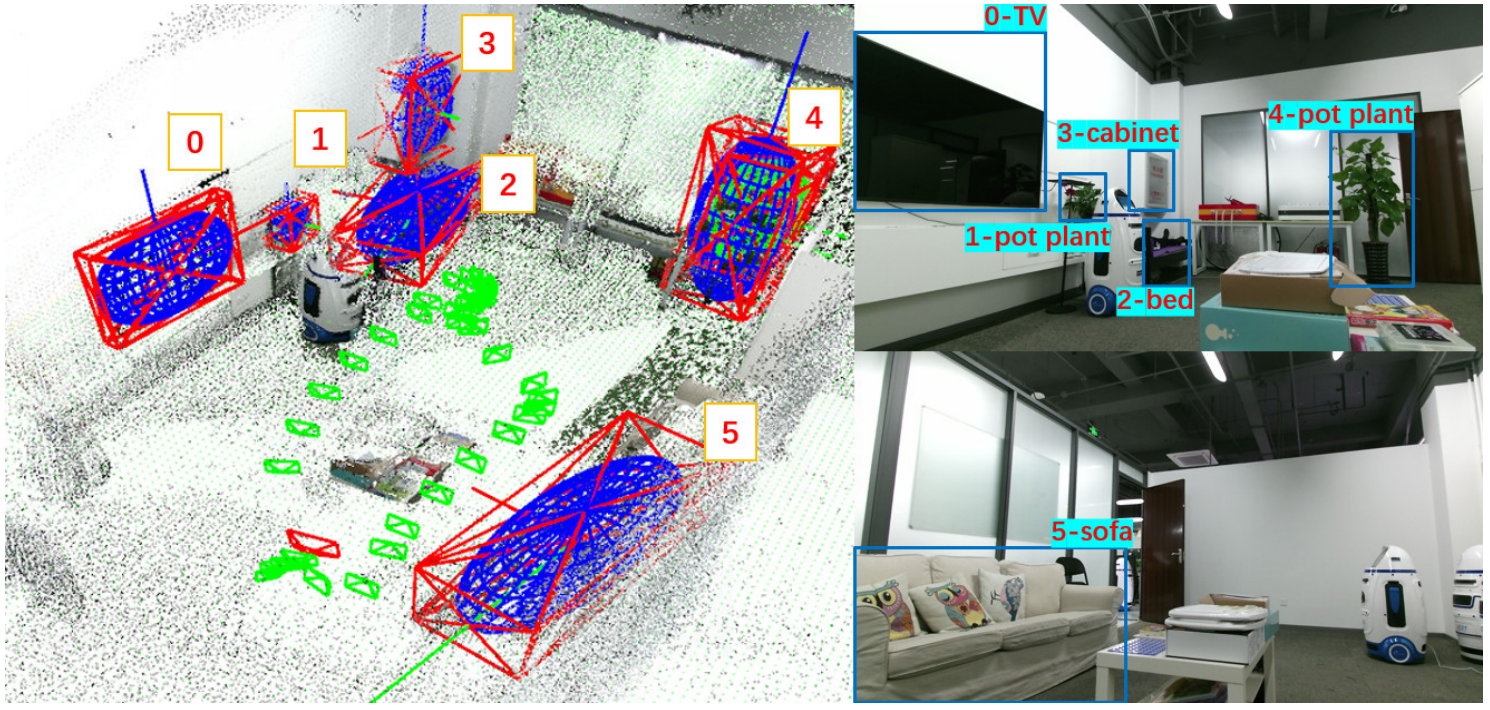

| Object Semantic Grid Mapping with 2D LiDAR and RGB-D Camera for Domestic Robot Navigation |

Hands-On Experience with Real-World Robots


I have been a huge robotics enthusiast since my college years. I served as president of the Robotics Student Association at Beihang University (2015-2016) and designed a rotorcraft equipped with a master-slave mechanical arm (2015). I was vice captain of the Robot Team at Beihang University, participating in the Robocon National Robotics Competition (2016-2018). I also had the opportunity to work with various robots during my bachelor’s and master’s projects (2018, 2021).